Convolutional variational autoencoder with PyMC3 and Keras¶

Last update: 5 November, 2016

- Taku Yoshioka

In this document, I will show how autoencoding variational Bayes (AEVB) works in PyMC3’s automatic differentiation variational inference (ADVI). The example here is borrowed from Keras example, where convolutional variational autoencoder is applied to the MNIST dataset. The network architecture of the encoder and decoder are completely same. However, PyMC3 allows us to define the probabilistic model, which combines the encoder and decoder, in the way by which other general probabilistic models (e.g., generalized linear models), rather than directly implementing of Monte Carlo sampling and the loss function as done in the Keras example. Thus I think the framework of AEVB in PyMC3 can be extended to more complex models such as latent dirichlet allocation.

NOTE: This notebook ran with my folk of PyMC3 https://github.com/taku-y/pymc3/tree/advi_refactor.

For using Keras with PyMC3, we need to choose Theano Theano as the backend of Keras.

In [1]:

%autosave 0

%matplotlib inline

import sys, os

sys.path.insert(0, os.path.expanduser('~/work/git/github/Theano/Theano'))

sys.path.insert(0, os.path.expanduser('~/work/git/github/taku-y/pymc3'))

os.environ['KERAS_BACKEND'] = 'theano'

from theano import config

config.floatX = 'float32'

config.optimizer = 'fast_compile'

from collections import OrderedDict

from keras.layers import InputLayer, BatchNormalization, Dense, Convolution2D, Deconvolution2D, Activation, Flatten, Reshape

import numpy as np

import pymc3 as pm

from pymc3.variational import advi_minibatch

from theano import shared, config, function, clone, pp

import theano.tensor as tt

import keras

import matplotlib

import matplotlib.pyplot as plt

import matplotlib.gridspec as gridspec

import seaborn as sns

from keras import backend as K

K.set_image_dim_ordering('th')

Autosave disabled

Using Theano backend.

Load images¶

MNIST dataset can be obtained by scikit-learn API. The dataset contains images of digits.

In [2]:

from sklearn.datasets import fetch_mldata

mnist = fetch_mldata('MNIST original')

print(mnist.keys())

data = mnist['data'].reshape(-1, 1, 28, 28).astype('float32')

data /= np.max(data)

dict_keys(['data', 'COL_NAMES', 'DESCR', 'target'])

Use Keras¶

We define a utility function to get parameters from Keras models. Since we have set the backend to Theano, parameter objects are obtained as shared variables of Theano.

In the code, ‘updates’ are expected to include update objects

(dictionary of pairs of shared variables and update equation) of scaling

parameters of batch normalization. While not using batch normalization

in this example, if we want to use it, we need to pass these update

objects as an argument of theano.function() inside the PyMC3 ADVI

function. The current version of PyMC3 does not support it, it is easy

to modify (I want to send PR in future).

The learning phase below is used for Keras to known the learning phase, training or test. This information is important also for batch normalization.

In [3]:

from keras.models import Sequential

from keras.layers import Dense, BatchNormalization

def get_params(model):

"""Get parameters and updates from Keras model

"""

shared_in_updates = list()

params = list()

updates = dict()

for l in model.layers:

attrs = dir(l)

# Updates

if 'updates' in attrs:

updates.update(l.updates)

shared_in_updates += [e[0] for e in l.updates]

# Shared variables

for attr_str in attrs:

attr = getattr(l, attr_str)

if type(attr) is tt.sharedvar.TensorSharedVariable:

if attr is not model.get_input_at(0):

params.append(attr)

return list(set(params) - set(shared_in_updates)), updates

# This code is required when using BatchNormalization layer

keras.backend.theano_backend._LEARNING_PHASE = \

shared(np.uint8(1), name='keras_learning_phase')

Encoder and decoder¶

First, we define the convolutional neural network for encoder using Keras API. This function returns a CNN model given the shared variable representing observations (images of digits), the dimension of latent space, and the parameters of the model architecture.

In [4]:

def cnn_enc(xs, latent_dim, nb_filters=64, nb_conv=3, intermediate_dim=128):

"""Returns a CNN model of Keras.

Parameters

----------

xs : theano.tensor.sharedvar.TensorSharedVariable

Input tensor.

latent_dim : int

Dimension of latent vector.

"""

input_layer = InputLayer(input_tensor=xs,

batch_input_shape=xs.get_value().shape)

model = Sequential()

model.add(input_layer)

cp1 = {'border_mode': 'same', 'activation': 'relu'}

cp2 = {'border_mode': 'same', 'activation': 'relu', 'subsample': (2, 2)}

cp3 = {'border_mode': 'same', 'activation': 'relu', 'subsample': (1, 1)}

cp4 = cp3

model.add(Convolution2D(1, 2, 2, **cp1))

model.add(Convolution2D(nb_filters, 2, 2, **cp2))

model.add(Convolution2D(nb_filters, nb_conv, nb_conv, **cp3))

model.add(Convolution2D(nb_filters, nb_conv, nb_conv, **cp4))

model.add(Flatten())

model.add(Dense(intermediate_dim, activation='relu'))

model.add(Dense(2 * latent_dim))

return model

Then we define a utility class for encoders. This class does not depend

on the architecture of the encoder except for input shape (tensor4

for images), so we can use this class for various encoding networks.

In [5]:

class Encoder:

"""Encode observed images to variational parameters (mean/std of Gaussian).

Parameters

----------

xs : theano.tensor.sharedvar.TensorSharedVariable

Placeholder of input images.

dim_hidden : int

The number of hidden variables.

net : Function

Returns

"""

def __init__(self, xs, dim_hidden, net):

model = net(xs, dim_hidden)

self.model = model

self.xs = xs

self.out = model.get_output_at(-1)

self.means = self.out[:, :dim_hidden]

self.lstds = self.out[:, dim_hidden:]

self.params, self.updates = get_params(model)

self.enc_func = None

self.dim_hidden = dim_hidden

def _get_enc_func(self):

if self.enc_func is None:

xs = tt.tensor4()

means = clone(self.means, {self.xs: xs})

lstds = clone(self.lstds, {self.xs: xs})

self.enc_func = function([xs], [means, lstds])

return self.enc_func

def encode(self, xs):

# Used in test phase

keras.backend.theano_backend._LEARNING_PHASE.set_value(np.uint8(0))

enc_func = self._get_enc_func()

means, _ = enc_func(xs)

return means

def draw_samples(self, xs, n_samples=1):

"""Draw samples of hidden variables based on variational parameters encoded.

Parameters

----------

xs : numpy.ndarray, shape=(n_images, 1, height, width)

Images.

"""

# Used in test phase

keras.backend.theano_backend._LEARNING_PHASE.set_value(np.uint8(0))

enc_func = self._get_enc_func()

means, lstds = enc_func(xs)

means = np.repeat(means, n_samples, axis=0)

lstds = np.repeat(lstds, n_samples, axis=0)

ns = np.random.randn(len(xs) * n_samples, self.dim_hidden)

zs = means + np.exp(lstds) * ns

return ns

In a similar way, we define the decoding network and a utility class for decoders.

In [6]:

def cnn_dec(zs, minibatch_size, nb_filters=64, nb_conv=3, dim_hidden=100, output_shape=(1, 28, 28)):

"""Returns a CNN model of Keras.

Parameters

----------

zs : theano.tensor.var.TensorVariable

Input tensor.

"""

input_layer = InputLayer(input_tensor=zs,

batch_input_shape=zs.tag.test_value.shape)

model = Sequential()

model.add(input_layer)

model.add(Dense(dim_hidden, activation='relu'))

model.add(Dense(nb_filters * 14 * 14, activation='relu'))

cp1 = {'border_mode': 'same', 'activation': 'relu', 'subsample': (1, 1)}

cp2 = cp1

cp3 = {'border_mode': 'valid', 'activation': 'relu', 'subsample': (2, 2)}

cp4 = {'border_mode': 'valid', 'activation': 'sigmoid'}

output_shape_ = (minibatch_size, nb_filters, 14, 14)

model.add(Reshape(output_shape_[1:]))

model.add(Deconvolution2D(nb_filters, nb_conv, nb_conv, output_shape_, **cp1))

model.add(Deconvolution2D(nb_filters, nb_conv, nb_conv, output_shape_, **cp2))

output_shape_ = (minibatch_size, nb_filters, 29, 29)

model.add(Deconvolution2D(nb_filters, 2, 2, output_shape_, **cp3))

model.add(Convolution2D(1, 2, 2, **cp4))

return model

In [7]:

class Decoder:

"""Decode hidden variables to images.

Parameters

----------

zs : Theano tensor

Hidden variables.

"""

def __init__(self, zs, net):

model = net(zs, dim_hidden)

self.model = model

self.zs = zs

self.out = model.get_output_at(-1)

self.params, self.updates = get_params(model)

self.dec_func = None

def _get_dec_func(self):

if self.dec_func is None:

zs = tt.matrix()

xs = clone(self.out, {self.zs: zs})

self.dec_func = function([zs], xs)

return self.dec_func

def decode(self, zs):

"""Decode hidden variables to images.

An image consists of the mean parameters of the observation noise.

Parameters

----------

zs : numpy.ndarray, shape=(n_samples, dim_hidden)

Hidden variables.

"""

# Used in test phase

keras.backend.theano_backend._LEARNING_PHASE.set_value(np.uint8(0))

return self._get_dec_func()(zs)

Generative model¶

We can construct the generative model with PyMC3 API and the functions and classes defined above. We set the size of mini-batches to 100 and the dimension of the latent space to 2 for visualization.

In [8]:

# Constants

minibatch_size = 100

dim_hidden = 2

A placeholder of images is required to which mini-batches of images will

be placed in the ADVI inference. It is also the input to the encoder. In

the below, enc.model is a Keras model of the encoder network, thus

we can check the model architecture using the method summary().

In [9]:

# Placeholder of images

xs_t = shared(np.zeros((minibatch_size, 1, 28, 28)).astype('float32'), name='xs_t')

# Encoder

enc = Encoder(xs_t, dim_hidden, net=cnn_enc)

enc.model.summary()

____________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

====================================================================================================

input_1 (InputLayer) (100, 1, 28, 28) 0

____________________________________________________________________________________________________

convolution2d_1 (Convolution2D) (100, 1, 28, 28) 5 input_1[0][0]

____________________________________________________________________________________________________

convolution2d_2 (Convolution2D) (100, 64, 14, 14) 320 convolution2d_1[0][0]

____________________________________________________________________________________________________

convolution2d_3 (Convolution2D) (100, 64, 14, 14) 36928 convolution2d_2[0][0]

____________________________________________________________________________________________________

convolution2d_4 (Convolution2D) (100, 64, 14, 14) 36928 convolution2d_3[0][0]

____________________________________________________________________________________________________

flatten_1 (Flatten) (100, 12544) 0 convolution2d_4[0][0]

____________________________________________________________________________________________________

dense_1 (Dense) (100, 128) 1605760 flatten_1[0][0]

____________________________________________________________________________________________________

dense_2 (Dense) (100, 4) 516 dense_1[0][0]

====================================================================================================

Total params: 1680457

____________________________________________________________________________________________________

The probabilistic model involves only two random variables; latent variable \(\mathbf{z}\) and observation \(\mathbf{x}\). We put a Normal prior on \(\mathbf{z}\), decode the variational parameters of \(q(\mathbf{z}|\mathbf{x})\) and define the likelihood of the observation \(\mathbf{x}\).

In [10]:

with pm.Model() as model:

# Hidden variables

zs = pm.Normal('zs', mu=0, sd=1, shape=(minibatch_size, dim_hidden), dtype='float32')

# Decoder and its parameters

dec = Decoder(zs, net=cnn_dec)

# Observation model

xs_ = pm.Normal('xs_', mu=dec.out.ravel(), sd=1, observed=xs_t.ravel(), dtype='float32')

In the above definition of the generative model, we do not know how the

decoded variational parameters are passed to

\(q(\mathbf{z}|\mathbf{x})\). To do this, we will set the argument

local_RVs in the ADVI function of PyMC3.

In [11]:

local_RVs = OrderedDict({zs: ((enc.means, enc.lstds), len(data) / float(minibatch_size))})

This argument is a OrderedDict whose keys are random variables to

which the decoded variational parameters are set, zs in this model.

Each value of the dictionary contains two theano expressions

representing variational mean (enc.means) and log of standard

deviations (enc.lstds). In addition, a scaling constant

(len(data) / float(minibatch_size)) is required to compensate for

the size of mini-batches of the corresponding log probability terms in

the evidence lower bound (ELBO), the objective of the variational

inference.

The scaling constant for the observed random variables is set in the same way.

In [12]:

observed_RVs = OrderedDict({xs_: len(data) / float(minibatch_size)})

We can also check the architecture of the decoding network as for the encoding network.

In [13]:

dec.model.summary()

____________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

====================================================================================================

input_2 (InputLayer) (100, 2) 0

____________________________________________________________________________________________________

dense_3 (Dense) (100, 100) 300 input_2[0][0]

____________________________________________________________________________________________________

dense_4 (Dense) (100, 12544) 1266944 dense_3[0][0]

____________________________________________________________________________________________________

reshape_1 (Reshape) (100, 64, 14, 14) 0 dense_4[0][0]

____________________________________________________________________________________________________

deconvolution2d_1 (Deconvolution2(100, 64, 14, 14) 36928 reshape_1[0][0]

____________________________________________________________________________________________________

deconvolution2d_2 (Deconvolution2(100, 64, 14, 14) 36928 deconvolution2d_1[0][0]

____________________________________________________________________________________________________

deconvolution2d_3 (Deconvolution2(100, 64, 28, 28) 16448 deconvolution2d_2[0][0]

____________________________________________________________________________________________________

convolution2d_5 (Convolution2D) (100, 1, 27, 27) 257 deconvolution2d_3[0][0]

====================================================================================================

Total params: 1357805

____________________________________________________________________________________________________

Inference¶

To perform inference, we need to create generators of mini-batches and define the optimizer used for ADVI. The optimizer is a function that returns Theano parameter update object (dictionary).

In [14]:

# Mini-batches

def create_minibatch(data, minibatch_size):

rng = np.random.RandomState(0)

start_idx = 0

while True:

# Return random data samples of set size batchsize each iteration

ixs = rng.randint(data.shape[0], size=minibatch_size)

yield data[ixs]

minibatches = zip(create_minibatch(data, minibatch_size))

def adam(loss, param):

adam_ = keras.optimizers.Adam()

return adam_.get_updates(param, [], loss)

Let us execute ADVI function of PyMC3.

In [15]:

with model:

v_params = pm.variational.advi_minibatch(

n=500, minibatch_tensors=[xs_t], minibatches=minibatches,

local_RVs=local_RVs, observed_RVs=observed_RVs,

encoder_params=(enc.params + dec.params),

optimizer=adam

)

Iteration 0 [0%]: ELBO = -56970296.0

Iteration 50 [10%]: Average ELBO = -53106500.16

Iteration 100 [20%]: Average ELBO = -52194973.92

Iteration 150 [30%]: Average ELBO = -52064982.24

Iteration 200 [40%]: Average ELBO = -51975449.2

Iteration 250 [50%]: Average ELBO = -51931158.48

Iteration 300 [60%]: Average ELBO = -51887135.28

Iteration 350 [70%]: Average ELBO = -51885579.92

Iteration 400 [80%]: Average ELBO = -51846011.28

Iteration 450 [90%]: Average ELBO = -51832335.44

Finished [100%]: ELBO = -51903096.0

Results¶

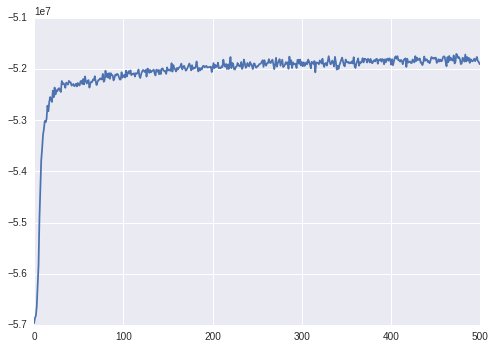

v_params, the returned value of the ADVI function, has the trace of

ELBO during inference (optimization). We can see the convergence of the

inference.

In [16]:

plt.plot(v_params.elbo_vals)

Out[16]:

[<matplotlib.lines.Line2D at 0x7faa20a4b748>]

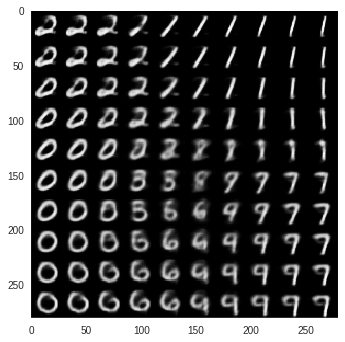

Finally, we see the distribution of the images in the latent space. To do this, we make 2-dimensional points in a grid and feed them into the decoding network. The mean of \(p(\mathbf{x}|\mathbf{z})\) is the image corresponding to the samples on the grid.

In [17]:

zs = np.array([(z1, z2)

for z1 in np.arange(-1, 1, 0.2)

for z2 in np.arange(-1, 1, 0.2)]).astype('float32')

xs = dec.decode(zs)[:, 0, :, :]

xs = np.bmat([[xs[i + j * 10] for i in range(10)] for j in range(10)])

matplotlib.rc('axes', **{'grid': False})

plt.imshow(xs, interpolation='none', cmap='gray')

Out[17]:

<matplotlib.image.AxesImage at 0x7faa1a963240>

In [ ]: